Goal

Most people write tests from within whatever framework has already been built at their workplace. This makes it hard to try new techniques or to imagine how new types of tests could be integrated. Furthermore, most frameworks have layer upon layer of abstraction, so I made a repo that is meant as a playground of sorts. I wanted to split the task of learning in two by separating the how from the complexity of a full framework. The repo explores how to write automated tests against an incredibly simple system, so that each test is easy to manipulate and can be held “in memory” at once. These are not unit tests but integration tests at the API level.

Link to repo

Structure of repo:

toy_example/app.py

toy_example/database_creation.py

toy_example/requirements_toy_example.txt

toy_example/README.md

toy_example/tests/load_testing_file.py

toy_example/tests/test_faker.py

toy_example/tests/test_property_based.py

Getting Started

Create a virtual environment

Install the dependencies listed in requirements_toy_example.txt (pip install -r requirements_toy_example.txt)

Open the database_creation.py file and run the create_db_table function

Run the Flask app in app.py

test_faker.py and test_property_based.py are both meant to be run with PyTest

load_testing_file.py is meant to be run with the Locust framework (more info later)

The App

The application under test is a very simple Flask-based API backed by an equally simple SQLite database. The routes defined in app.py provide basic CRUD (Create, Read, Update, and Delete) functionality. The subject matter is comic book artists. The only information that can be passed in is:

First name

Last name

Birth year

Primary key (an integer that counts upward)

All calls are made to the same local endpoint:

http://127.0.0.1:5000/artists

The basic functions available are:

Get (show all records available)

Get by ID (show a specific record, based on the primary key)

Post (insert a new record into database)

Put (modify an existing record, requires primary key id)

Delete (remove a record)
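To make the five calls concrete, here is a minimal sketch that builds the corresponding HTTP requests with Python's standard urllib but does not send them. It assumes the ID is passed as a path segment (/artists/<id>) and that the JSON keys are first_name, last_name and birth_year. Both are assumptions, so check app.py for the actual route and field names.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:5000/artists"  # endpoint from the text above
JSON_HEADERS = {"Content-Type": "application/json"}


def _json_body(payload):
    # Encode a dict as a UTF-8 JSON request body
    return json.dumps(payload).encode("utf-8")


def list_all_request():
    # Get: show all records
    return urllib.request.Request(BASE_URL, method="GET")


def get_by_id_request(artist_id):
    # Get by ID: assumed URL shape /artists/<id>
    return urllib.request.Request(f"{BASE_URL}/{artist_id}", method="GET")


def insert_request(artist):
    # Post: the new record travels as a JSON body
    return urllib.request.Request(
        BASE_URL, data=_json_body(artist), headers=JSON_HEADERS, method="POST"
    )


def update_request(artist_id, artist):
    # Put: the primary key in the URL identifies which record to modify
    return urllib.request.Request(
        f"{BASE_URL}/{artist_id}",
        data=_json_body(artist),
        headers=JSON_HEADERS,
        method="PUT",
    )


def delete_request(artist_id):
    # Delete: remove the record with this primary key
    return urllib.request.Request(f"{BASE_URL}/{artist_id}", method="DELETE")
```

Once the Flask app is running, any of these can be sent with urllib.request.urlopen(...).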

Each of these maps to a specific database function in a close to 1-to-1 relationship. This setup is pretty similar to our own API at Broadsign. Almost every call that ATS makes to achieve our goals fits into one of these categories (with a few exceptions).
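On the database side, that 1-to-1 mapping could look something like the following sketch, which uses Python's built-in sqlite3 module. The table name, column names, and function names are assumptions for illustration, and the repo's own create_db_table may differ. Declaring id as INTEGER PRIMARY KEY is what produces the upward-counting integer key.

```python
import sqlite3


def create_db_table(conn):
    # INTEGER PRIMARY KEY aliases SQLite's rowid, so new IDs count upward.
    # Without AUTOINCREMENT, the ID of a deleted highest row may be reused.
    conn.execute(
        """CREATE TABLE IF NOT EXISTS artists (
               id INTEGER PRIMARY KEY,
               first_name TEXT,
               last_name TEXT,
               birth_year INTEGER
           )"""
    )


def get_all(conn):  # Get
    return conn.execute("SELECT * FROM artists").fetchall()


def get_by_id(conn, artist_id):  # Get by ID
    return conn.execute(
        "SELECT * FROM artists WHERE id = ?", (artist_id,)
    ).fetchone()


def insert(conn, first_name, last_name, birth_year):  # Post
    cursor = conn.execute(
        "INSERT INTO artists (first_name, last_name, birth_year) VALUES (?, ?, ?)",
        (first_name, last_name, birth_year),
    )
    conn.commit()
    return cursor.lastrowid  # the newly assigned primary key


def update(conn, artist_id, first_name, last_name, birth_year):  # Put
    conn.execute(
        "UPDATE artists SET first_name = ?, last_name = ?, birth_year = ? WHERE id = ?",
        (first_name, last_name, birth_year, artist_id),
    )
    conn.commit()


def delete(conn, artist_id):  # Delete
    conn.execute("DELETE FROM artists WHERE id = ?", (artist_id,))
    conn.commit()
```

For experiments, sqlite3.connect(":memory:") gives a throwaway database that disappears when the connection closes.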

The idea is to have an application simple enough that it takes only a few minutes to understand what is going on, with no stored functions that take lots of mental space to parse. There is only 1 table right now, and it is a dead simple table with very little data.

Regression Testing (Faker tests)

The main use case for writing tests with the Faker library is to ensure that, when given reasonable data, the app continues to perform the expected actions. These make up the majority of the tests we run in ATS: we have established a specification and written a test to make sure that spec continues to hold over time as we change parts of DML. For these tests we follow the format Arrange, Act, and Assert. Essentially, this structure signals what is under test and what is merely incidental setup code. This is a common theme in BDD (behaviour-driven development), which sometimes uses the terms given-when-then from the Gherkin specification instead.

Each test has a specific state that it wants to push the system into (arrange). It then performs a change to the system (act). Finally, it collects data about how the system responds and checks it (assert).

Ideally these tests should not be tied to specific implementation details. Where they must be, the detail should be abstracted into a single method so that when it changes we only need to update one place. These tests should be as fast as possible; however, the fastest tests will also generally cover the least code. A full end-to-end test will be slow, require lots of network activity and database writes, and provide lots of places for items not under test to cause false failures. So we need to strike a balance when writing these types of tests.

Here is a link to common fake data that the Faker library can provide. We mostly use it to create reasonable first and last names. This will not stress the system, but it will ensure that “normal” data input does not cause the system to enter a bad state. Here is an example of how we generate a fake first name:

import faker

fake = faker.Faker()

first_name = fake.first_name()

I put that code into the method create_faker_artist, which returns a JSON-like object to be used in a REST call. After each call I check that it returns a 200 status code, which indicates that it completed successfully. The simplest test is test_insert, where I provide all the required info in a hardcoded format, just for ease of inspection. The next simplest is test_list_all, which simply does a GET against the endpoint and returns everything in the database. After that we actually start using the Faker library in test_insert_faker, which grabs two fake names (first and last) and a random birth year somewhere between 1850 and 2022. I was fairly flexible in how I treat the birth year, since SQLite's dynamic typing means it does not really care what data type the value arrives as.

Now we move on to the more complicated tests: test_search_by_id, test_update_artist, and test_delete_artist. All of these require the creation of a specific object so that it can later be manipulated. For the search test I need to create a new comic book artist in order to search for it. So I start with a POST (create) and then make a search call (GET) based on the ID returned by the POST. Then I assert that the object I sent in the POST matches what was returned by the GET. Updating and deleting follow the same steps, except that instead of the GET I do a PUT or a DELETE.

Exploration Tests (Hypothesis)

The Hypothesis library offers a lot of options for creating random data. The basic premise is that you feed in a recipe (a strategy) defined using the @given decorator. Each keyword passed to @given names the test parameter that will receive the generated value (test_first or test_last in this case). The strategy defines whether that value is text, an integer, or something else; read more about it in this article here. Once you define the scope of acceptable input, Hypothesis will run the test body dozens, hundreds, or even thousands of times within a single PyTest test. How many times it runs and how long each example may take are controlled with the @settings decorator, through its max_examples and deadline arguments. Hypothesis keeps a database of examples in a .hypothesis sub-folder so that failures are replayed on later runs. When a test fails, it shrinks the input down to the minimal version that still causes the problem; you can learn more in this PyCon talk.
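Here is a small, self-contained illustration of the @given/@settings mechanics. To keep each example in the millisecond range, it checks a property against an in-memory SQLite table rather than the running API. The table and the test are hypothetical, not the repo's test_property_based.py. Note that deadline is given in milliseconds (a timedelta or None also work).

```python
import sqlite3

from hypothesis import given, settings
from hypothesis import strategies as st

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE artists (id INTEGER PRIMARY KEY, first_name TEXT, last_name TEXT)"
)

# Names of 1-50 characters, skipping control characters and the surrogate range
names = st.text(
    alphabet=st.characters(min_codepoint=32, max_codepoint=0xD7FF),
    min_size=1,
    max_size=50,
)


@settings(max_examples=500, deadline=200)  # up to 500 inputs, 200 ms each
@given(test_first=names, test_last=names)
def test_names_round_trip(test_first, test_last):
    cursor = conn.execute(
        "INSERT INTO artists (first_name, last_name) VALUES (?, ?)",
        (test_first, test_last),
    )
    row = conn.execute(
        "SELECT first_name, last_name FROM artists WHERE id = ?",
        (cursor.lastrowid,),
    ).fetchone()
    # Property: whatever text goes in comes back out unchanged
    assert row == (test_first, test_last)
```

Under PyTest this is collected like any other test. Calling test_names_round_trip() directly also runs all the generated examples.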

So now that we have looked at the decorators and given a short idea of what this does, let’s tackle the why. We know that random data is not going to hit the exact sweet spot that causes a bug every time, so why feed random data into our automation at all? The goal of Hypothesis is to find the right random data in the shortest amount of time possible, so it keeps track of what it has tried before and tries something else next time. Given that we can run a test thousands of times, this is clearly most effective when the test is incredibly short (milliseconds, not minutes). So this is a tool that will help us when making calls against an API or feeding unit-test level inputs into a class. It is not something we want to use while setting up a BSP that takes 30-90 seconds per test iteration. So why bother with this kind of test? It lets us catch hidden gotchas early in the pipeline, so that the longer and more costly tests involving BSP, BSS, and all the networking in between can stay minimal. It also means that we do not need to think of every case and write a specific test for each input. We let the computer do the drudge work of iterating over a huge range of inputs, because that is what it is good at! We might not find many new bugs with this method, but it is a new tool in our arsenal that lets us state with more confidence that a feature will not break in this way. It also frees up head space to concentrate on other types of testing.

The actual mechanics of the test are very simple: after I define what to feed in, I call insert_artist, which accepts either a first or a last name. The JSON fed into the API has two key-value pairs that can be overwritten, first name and last name. Depending on which argument you pass, the Hypothesis-generated value is fed into the matching key. Failure in this case is anything other than a status code of 200. If we created a few helper functions for these types of tests, we could cover the majority of our API calls in relatively few tests, each of which would differ very little. I don’t know if that is the most effective use of our time, but as we continue to add new API calls I think this type of testing might be a good sanity check before we release them to the wild. There are other libraries that combine Hypothesis with Swagger (OpenAPI) specifications, like this one. The goal here is that we don’t need to know about and exhaustively test every end state; we simply define the bounds and let the computer iterate through the nearly limitless options.

Load Testing (Locust)

The goal of this library (and type of test) is to put strain on your endpoints and see if they continue to return the right data. In this case the “right data” mostly means a 200 status code. This test cannot easily be run by pytest, and its outcome is generally not an assert-type answer: we try different levels of stress and see whether the code is capable of responding. Each iteration or change can then be re-tested to make sure nothing we change has a negative performance impact.

Example command line to run the file:

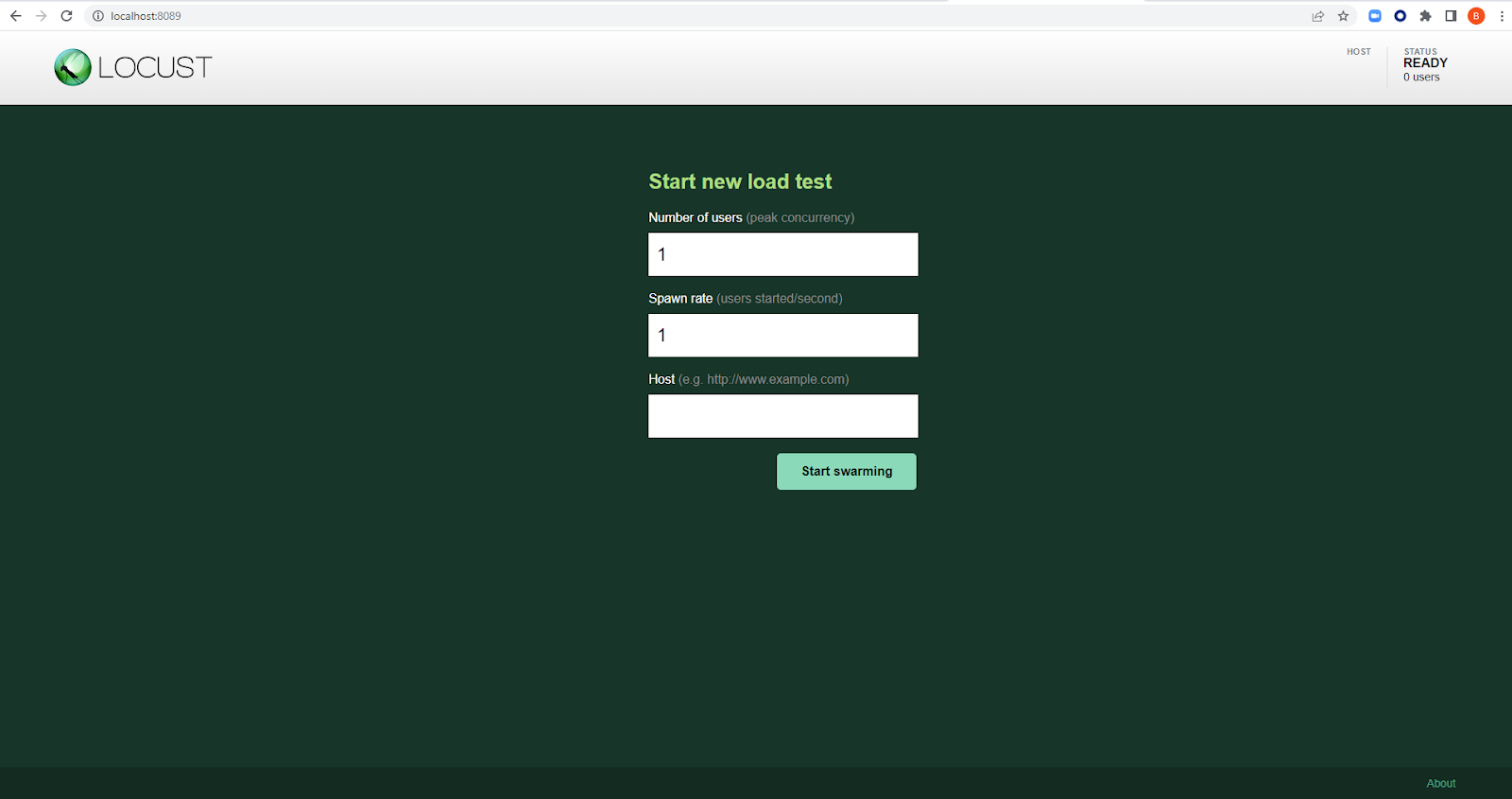

locust -f .\toy_example\tests\load_testing_file.py

This will spawn a web interface at localhost:8089 that allows you to control what is happening as well as see metrics graphed (and outputted if you desire).

Web Interface:

This is the main screen, where you set how many threads (simulated users) you want active and how quickly they should spawn. It also lets you pass in a host endpoint. I am not using that functionality since everything is hardcoded, but it exists.

Once you have created a new test run you will be presented with some output in a data table that looks like this:

Generally, the most important information comes from the outliers, so the 99th percentile or max columns, though it depends on what you are looking for. We can also graph the results by clicking the Charts tab in the top left, which will output something that looks like this:

In terms of the code there are a few items to note:

class ArtistSpawn(HttpUser):

HttpUser is where the actual requesting code is inherited from. Under the hood it is basically just the requests library, with some layers added to help with concurrency and usage in the framework. If we wanted to swap it out for gRPC, we could do so fairly easily by inheriting from a different User class.

wait_time = between(1, 3)

The wait time defines how long each thread waits between calls. If we set it to 0 (for example with constant(0)), it will make requests as fast as possible. This setting gives 1 to 3 seconds between requests.

def on_start(self):
    fake = faker.Faker()
    self.first_name = fake.first_name()
    self.last_name = fake.last_name()
    self.birth_year = random.randint(1850, 2022)

This is called once for each simulated user when it starts. The goal here is to generate fake data to pass into the insert call. We could hard-code it, but this way we get a slight bit of variability so we can look at the database and see patterns if we want.

@task(1)

This defines how often each method is picked relative to the others. Here the insert has a weight of 1, while the other function uses @task(3), so on average the insert runs once for every 3 times the other function runs. So the inserts should make up roughly 1 out of 4 requests on any given thread.

The two functions are a basic insert and a get call (which returns all artists). We can define any behaviour we want, so long as the base class we are inheriting from supports it. Locust then uses the gevent concurrency library to spam whatever we choose at our endpoint.